Your AI Is Illegal

Nations and enterprises are demanding 'AI Sovereignty,' but current platforms can't deliver. Here's the playbook for building the compliance-first developer tools for this new, fragmented reality.

⚡ The Signal

Geopolitics is crashing the AI party. While Silicon Valley chases AGI, governments and global enterprises are pumping trillions into a different race: AI Sovereignty. This isn't just about national pride; it’s a funded mandate. Nations from France to Japan are demanding their own infrastructure, and as a recent report highlights, everyone wants AI sovereignty, but the tools to achieve it are lagging far behind.

🚧 The Problem

The entire modern AI stack, from OpenAI to Anthropic, was built on a simple premise: your data, our cloud. This model is now fundamentally broken. Enterprises are hitting a wall, unable to move beyond small-scale pilots because they can't guarantee data residency or prove compliance. Developers are caught in the middle, forced to choose between state-of-the-art hosted models that violate their data policies and less capable on-premises alternatives. There's no clean way to deploy powerful third-party agents within your own perimeter and maintain a bulletproof audit trail.

🚀 The Solution

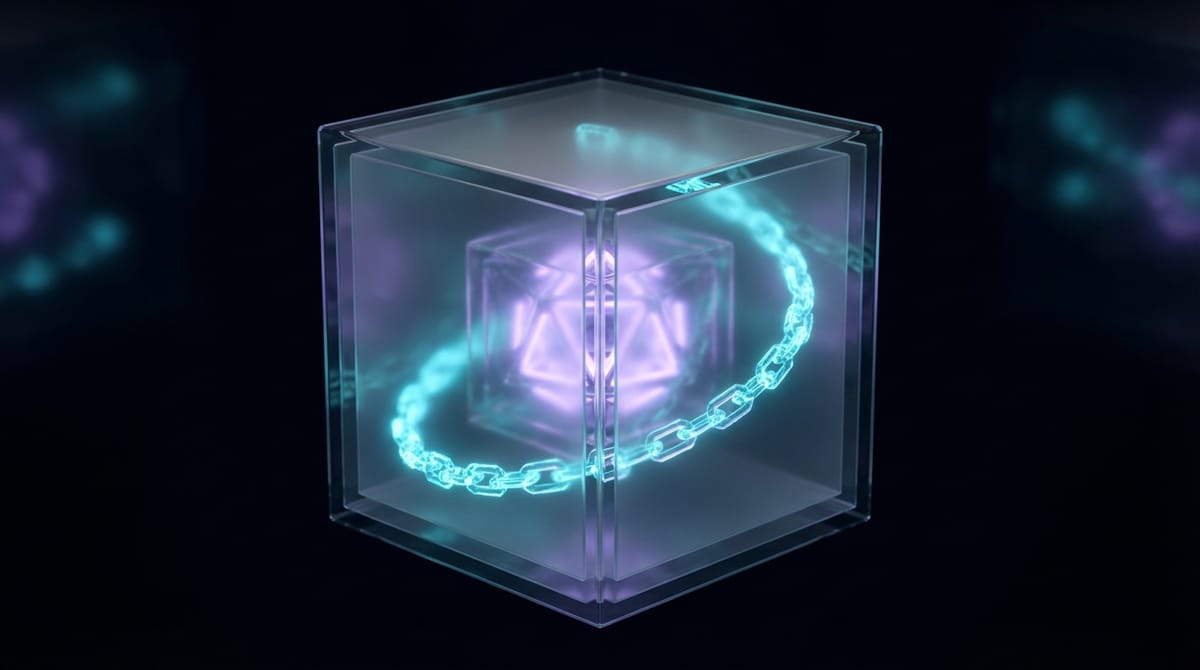

Enter StateCraft. It's not another LLM. It's a developer-first "sovereign sandbox" for building, testing, and deploying AI agents that are compliant by default. StateCraft provides a secure, containerized environment that can be deployed anywhere, from private cloud to on-prem, so you bring the models to your data, not the other way around. It wraps every agent action in a governance layer, creating an immutable, cryptographic audit trail for every single inference, decision, and data access request. You get to use the best models without sacrificing control.
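
To make the "cryptographic audit trail" idea concrete, here is a minimal sketch of one common way to build it: a hash-chained log, where each entry commits to the hash of the entry before it. Everything in it is an assumption for illustration. `AuditLog`, `governed`, and the field names are hypothetical, not a StateCraft API, and a hash chain on its own is tamper-evident rather than truly immutable. A production system would also need to sign entries or anchor the latest hash somewhere outside the sandbox.

```python
import hashlib
import json
from dataclasses import dataclass, field

# Hypothetical starting value for the chain (assumption for this sketch).
GENESIS_HASH = "0" * 64


def _digest(prev_hash, payload):
    """SHA-256 over the previous hash plus a canonical JSON form of the payload."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only log in which every entry commits to the one before it."""
    entries: list = field(default_factory=list)

    def record(self, actor, action, detail):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        payload = {
            "seq": len(self.entries),
            "actor": actor,
            "action": action,
            "detail": detail,
        }
        entry = {
            "payload": payload,
            "prev": prev_hash,
            "hash": _digest(prev_hash, payload),
        }
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            expected = _digest(prev_hash, entry["payload"])
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


def governed(log, actor, action):
    """Hypothetical governance wrapper: log the call, then run it."""
    def wrap(fn):
        def inner(*args, **kwargs):
            log.record(actor, action, {"args": repr(args), "kwargs": repr(kwargs)})
            return fn(*args, **kwargs)
        return inner
    return wrap
```

An agent tool decorated with `@governed(log, "agent-1", "data_access")` writes an entry before it runs, and an auditor can later call `log.verify()` to confirm that no entry was altered after the fact. Logging before execution is a deliberate choice in this sketch: a call that crashes still leaves a record.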